The Origins and Evolution of AI

AI in Ancient History

The origins of artificial intelligence can be traced back to humanity’s earliest attempts to understand and replicate intelligence. Though the technology we associate with AI didn’t exist, the concept of creating artificial beings or systems capable of human-like thought has captivated cultures and philosophers for centuries.

Greek Mythology

Greek mythology provides some of the earliest examples of artificial beings. Talos, a giant automaton made by the god Hephaestus, was crafted to protect the island of Crete. Powered by divine ingenuity, Talos symbolizes the idea of creating machines with specific tasks to assist or protect humans. Similarly, the myth of Pygmalion tells the story of a sculptor whose statue, Galatea, was brought to life by the goddess Aphrodite, reflecting the deep-seated human desire to imbue inanimate objects with life and intelligence.

These myths not only demonstrate humanity’s fascination with artificial life but also highlight the moral and ethical dilemmas that arise when humans create beings that blur the line between the natural and the artificial.

Philosophical Foundations

Beyond mythology, ancient philosophers laid the groundwork for understanding intelligence and reasoning. Aristotle’s work in logic, particularly syllogistic reasoning, formalized methods of drawing conclusions from premises, a fundamental concept in AI and computer science. His influence on formal reasoning provided a framework that modern AI systems, such as rule-based algorithms, still draw upon.

The philosopher’s exploration of “thinking” and “learning” processes posed questions that are remarkably similar to those tackled in AI today:

- What does it mean to think logically?

- How can we replicate reasoning in a structured and repeatable way?

Eastern Contributions

In addition to Western traditions, early Chinese and Indian philosophies also explored ideas about artificial beings. Ancient Chinese texts describe mechanical creations: the Liezi tells of a lifelike automaton presented to a king by the artificer Yan Shi, and the philosopher Mozi is traditionally credited with building mechanical devices such as a wooden bird. These early examples reflect a curiosity about combining craftsmanship with intelligence to create autonomous systems.

The Legacy of Ancient Ideas

These myths and philosophies did more than capture the imagination; they formed the cultural and intellectual foundation for the scientific pursuits that would follow. By combining storytelling, ethics, and logic, ancient civilizations shaped the questions and aspirations that would eventually lead to the development of AI as a formal discipline.

Understanding this ancient history provides a deeper appreciation for AI’s evolution and its enduring presence in human thought, from mythical guardians to modern intelligent systems.

The Foundations of Modern AI (1940s-1950s)

The 20th century marked a turning point for Artificial Intelligence, as theoretical concepts began to be explored through mathematics and engineering. This period laid the scientific groundwork for AI as we know it today.

Early Theories and Mathematical Models

The journey toward modern AI began in the 1940s when researchers started developing theoretical models to mimic human cognitive processes.

- 1943: Warren McCulloch and Walter Pitts created the first mathematical model for neural networks. Their model was based on the workings of biological neurons and demonstrated how logical functions could be represented in networks. This innovation introduced the concept that machines could simulate simple thought processes, inspiring decades of neural network research.

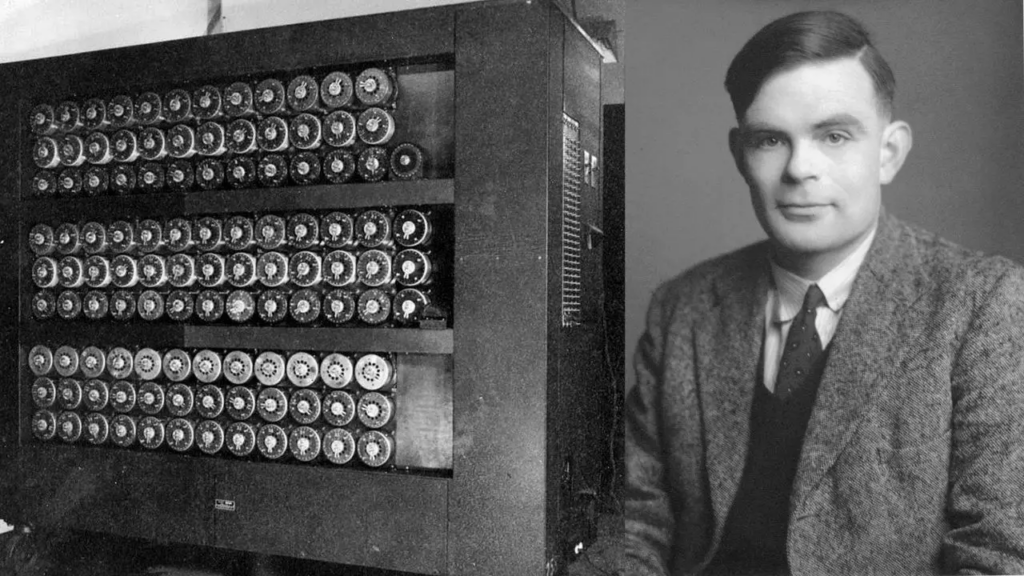
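
To make the McCulloch-Pitts idea concrete, here is a minimal Python sketch of a threshold unit that computes simple logical functions. It is an illustration rather than a reconstruction of the 1943 paper: the names `mcp_neuron`, `AND`, `OR`, and `NOT` are invented for this example, and inhibition is simplified to a negative weight.

```python
def mcp_neuron(inputs, weights, threshold):
    """Return 1 (fire) when the weighted sum of binary inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def AND(a, b):
    # Both inputs must be active to reach a threshold of 2.
    return mcp_neuron([a, b], [1, 1], threshold=2)


def OR(a, b):
    # A single active input is enough to reach a threshold of 1.
    return mcp_neuron([a, b], [1, 1], threshold=1)


def NOT(a):
    # Assumption: inhibition is modelled as a weight of -1 with threshold 0.
    return mcp_neuron([a], [-1], threshold=0)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(f"a={a} b={b} AND={AND(a, b)} OR={OR(a, b)}")
```

Each logical function differs only in its weights and threshold, which is the sense in which networks of such units can represent logic.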

The Visionary Work of Alan Turing

Alan Turing, one of the most influential figures in the development of AI, played a pivotal role during this era.

- 1950: Turing proposed what is now known as the Turing Test (he called it the “imitation game”) in his paper “Computing Machinery and Intelligence.” The test set a benchmark for machine intelligence: if a human judge could not reliably tell a machine’s conversation from a human’s, the machine would be considered intelligent.

- Earlier, in 1936, Turing had introduced the idea of a “universal machine” capable of performing any computation given the right instructions, a foundational concept in computer science.

The Dartmouth Conference: The Birth of AI as a Field

In 1956, the Dartmouth Conference brought together some of the brightest minds in mathematics, engineering, and psychology to formally discuss the possibilities of creating intelligent machines. Organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon, the conference was groundbreaking for two reasons:

- It gave the field its name: the term “Artificial Intelligence,” coined by John McCarthy in the 1955 proposal for the gathering, defined a new field of study.

- Researchers presented ambitious proposals to develop machines capable of learning, reasoning, and solving problems, setting the stage for decades of exploration.

A Field Is Born

The work of these pioneers laid the foundational principles of AI:

- Machines can emulate human thought through logic and computation.

- Neural networks can process information in ways inspired by biological systems.

- Collaboration across disciplines is essential to advance the field.

These early developments were monumental in transforming philosophical musings about intelligence into a scientific endeavor. Understanding this period helps contextualize the ambitious goals and subsequent challenges AI faced in the years that followed.

Growth and Challenges (1950s-1970s)

After its initial breakthroughs in the 1940s and 1950s, Artificial Intelligence experienced a period of rapid growth and experimentation. Researchers developed programs and systems that demonstrated the potential of machines to perform tasks like problem-solving and language processing. However, this era also highlighted the challenges and limitations of AI, leading to setbacks that would shape the future of the field.

Early Programs and Innovations

The 1950s and 1960s saw the creation of programs that showcased AI’s ability to simulate human thought processes:

- Logic Theorist (1955-1956): Developed by Allen Newell, Herbert A. Simon, and Cliff Shaw, this program proved theorems from Whitehead and Russell’s Principia Mathematica. It was one of the first demonstrations that machines could replicate logical reasoning.

- ELIZA (1966): Created by Joseph Weizenbaum, ELIZA was an early natural language processing program designed to simulate a conversation with a psychotherapist. Though simple by today’s standards, it highlighted the potential for machines to interact with humans through language.

These programs proved that AI could replicate specific human skills, sparking excitement about its possibilities.
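
The pattern-matching style of conversation that ELIZA is known for can be sketched in a few lines of Python. This is a toy illustration, not Weizenbaum's original script: the rules in `RULES`, the fallback `DEFAULT_REPLY`, and the function `respond` are all invented for this example, and real ELIZA scripts also swapped pronouns (for example "my" to "your"), which is omitted here.

```python
import re

# Hypothetical rules: each pairs a pattern with a reply template.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]
DEFAULT_REPLY = "Please go on."


def respond(message):
    """Return the reply for the first rule whose pattern matches the message."""
    text = message.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Reuse the user's own words inside the canned template.
            return template.format(*match.groups())
    return DEFAULT_REPLY


if __name__ == "__main__":
    print(respond("I need a holiday."))
    print(respond("The weather is nice."))
```

The program has no understanding of what is said; it only reflects fragments of the input back, which is why such a simple design could still feel conversational.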

The Rise of Symbolic AI

During this period, much of AI research focused on symbolic AI, also known as “Good Old-Fashioned AI” (GOFAI).

- Symbolic AI used logic and predefined rules to mimic human reasoning.

- Researchers believed that if they could codify knowledge into rules, machines could reason and solve problems like humans.

While symbolic AI achieved some success, its reliance on predefined rules limited its ability to handle ambiguous or complex real-world scenarios.

The First AI Winter (1970s)

The excitement surrounding AI in the 1950s and 1960s led to overly ambitious predictions that machines would achieve human-level intelligence within a few decades. When these expectations failed to materialize, funding for AI research was drastically reduced.

Key Challenges:

- Limited computing power hindered the ability to process complex algorithms.

- AI systems struggled with tasks that required understanding context or handling incomplete data.

- The reliance on symbolic AI revealed fundamental limitations in flexibility and scalability.

This period, known as the First AI Winter, saw many researchers shift focus to more practical applications, such as expert systems and robotics.

Lessons from the Challenges

Despite the setbacks, the work done during this era laid important groundwork for future advancements. Researchers learned that AI would require:

- More sophisticated algorithms capable of adapting to dynamic environments.

- Greater computational power to process complex tasks.

- New approaches beyond rule-based systems, paving the way for machine learning in later decades.

The growth and challenges of this period remind us that progress in AI is not always linear. Setbacks often lead to new perspectives and innovations, a theme that continues to define the field today.

The Shift to Machine Learning and Data-Driven AI (1980s-2010s)

After the challenges of the 1970s, Artificial Intelligence entered a new phase in the 1980s and 1990s, characterized by the rise of expert systems and a shift toward data-driven approaches. These decades marked a departure from purely symbolic AI, as researchers began exploring methods that allowed machines to learn from data rather than relying solely on predefined rules.

The Rise of Expert Systems (1980s)

Expert systems became a dominant force in AI during the 1980s. These systems were designed to emulate the decision-making processes of human experts in specialized fields.

- How They Worked: Expert systems relied on a knowledge base (a collection of rules and facts) and an inference engine to draw conclusions.

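- Notable Examples:

  - MYCIN: Developed at Stanford University in the 1970s to identify bacterial infections and recommend antibiotic treatments. It remained a research system but became a model for later expert systems.

  - XCON: Used by Digital Equipment Corporation to configure computer system orders for customers, saving time and reducing errors.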

Expert systems demonstrated AI’s potential for solving real-world problems, particularly in fields like healthcare, engineering, and business. However, their reliance on predefined rules limited their flexibility, and the high cost of development made them impractical for broader use.
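
The split between a knowledge base and an inference engine can be illustrated with a small forward-chaining sketch in Python. This is a simplified teaching example under stated assumptions, not how MYCIN or XCON were implemented: the rule contents, the fact names, and the function `infer` are all hypothetical.

```python
# Knowledge base (hypothetical): each rule is (set of required facts, concluded fact).
RULES = [
    ({"has_fever", "has_cough"}, "possible_infection"),
    ({"possible_infection", "culture_positive"}, "recommend_specialist_review"),
]


def infer(facts, rules):
    """Inference engine: apply rules repeatedly until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all of its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


if __name__ == "__main__":
    observed = {"has_fever", "has_cough", "culture_positive"}
    print(sorted(infer(observed, RULES)))
```

Because the rules live in data rather than in the engine, changing the system's expertise means editing `RULES`. That also hints at the maintenance burden: every new situation needs a rule that someone writes by hand.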

The Second AI Winter (Late 1980s)

The commercial success of expert systems initially brought enthusiasm and investment, but limitations soon became apparent:

- Expert systems were difficult to scale and adapt to changing environments.

- Maintaining and updating the knowledge base was time-consuming and expensive.

- The high expectations set by their early success led to disappointment when systems couldn’t deliver broader applications.

As a result, the late 1980s saw another reduction in funding and interest in AI, marking the Second AI Winter.

The Emergence of Machine Learning (1990s-2000s)

The 1990s marked a turning point, as researchers shifted their focus to machine learning (ML)—an approach where machines learn patterns from data rather than relying on hardcoded rules.

Why It Succeeded:

- The availability of larger datasets and more powerful computers made it possible to train algorithms on real-world data.

- Algorithms like support vector machines and decision trees offered more flexibility than rule-based systems.
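
The difference between a hardcoded rule and a learned one can be shown with a one-split decision tree (a "decision stump") in Python. This is a minimal sketch with made-up data: the names `fit_stump` and `predict` and the study-hours example are assumptions for illustration, and real decision-tree libraries use more refined splitting criteria.

```python
def fit_stump(values, labels):
    """Learn the threshold that best separates label 0 (below) from label 1 (at or above)."""
    best_threshold, best_correct = None, -1
    for candidate in sorted(set(values)):
        # Count how many training examples this candidate threshold classifies correctly.
        correct = sum((v >= candidate) == bool(y) for v, y in zip(values, labels))
        if correct > best_correct:
            best_threshold, best_correct = candidate, correct
    return best_threshold


def predict(threshold, value):
    return 1 if value >= threshold else 0


if __name__ == "__main__":
    # Hypothetical training data: hours studied and whether the student passed.
    hours = [1, 2, 3, 6, 7, 8]
    passed = [0, 0, 0, 1, 1, 1]
    threshold = fit_stump(hours, passed)
    print("learned threshold:", threshold)
    print("prediction for 5 hours:", predict(threshold, 5))
```

No one wrote the rule "pass if hours are at least 6"; the program derived it from examples, and different data would yield a different rule.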

Key Milestones:

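- 1997: IBM’s Deep Blue: This system defeated world chess champion Garry Kasparov, showcasing a computer’s ability to handle complex strategic tasks. Deep Blue relied mainly on large-scale search and hand-tuned evaluation rather than learning from data, but the match became a landmark for AI in the public eye.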

- 2000s: Image Recognition: Machine learning techniques began revolutionizing fields like computer vision, enabling machines to recognize and classify images with increasing accuracy.

The Deep Learning Revolution (2010s)

The 2010s saw a resurgence of AI, driven by advances in deep learning, a subset of machine learning inspired by the structure and function of the human brain.

- Neural Networks: Deep learning models use artificial neural networks with multiple layers to process data and recognize patterns at an unprecedented level of complexity.

- Notable Achievements:

  - Google DeepMind’s AlphaGo (2016): Defeated world Go champion Lee Sedol, showcasing AI’s ability to master highly complex games.

  - Generative Pre-trained Transformers (GPT): OpenAI’s GPT models revolutionized natural language processing, enabling AI to generate coherent and contextually relevant text.
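
What "multiple layers" means in practice can be sketched with a tiny two-layer network in plain Python. This is an illustration under stated assumptions: the weights are set by hand rather than learned, and the names `relu`, `dense`, and `xor_net` are invented for this example. Real deep learning models have far more layers and learn their weights from data.

```python
def relu(x):
    """Rectified linear unit: pass positive values through, clamp negatives to zero."""
    return max(0.0, x)


def dense(inputs, weights, biases, activation):
    """One layer: each output is activation(dot(inputs, weight_row) + bias)."""
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]


# Hand-picked (not trained) weights that make the network compute XOR.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [[1.0, -2.0]]
OUTPUT_B = [0.0]


def xor_net(x1, x2):
    # Layer 1 transforms the raw inputs; layer 2 combines the hidden features.
    hidden = dense([x1, x2], HIDDEN_W, HIDDEN_B, relu)
    (out,) = dense(hidden, OUTPUT_W, OUTPUT_B, lambda v: v)
    return out


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, xor_net(a, b))
```

XOR is a classic function that a single threshold unit cannot compute, so the example shows why stacking layers adds expressive power.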

The Impact of Data and Computation

This era emphasized the importance of data and computational power in AI development:

- Large datasets enabled models to learn from diverse examples, improving accuracy and generalizability.

- Advances in graphics processing units (GPUs) accelerated the training of deep learning models, making breakthroughs possible in a fraction of the time.

Lessons Learned

The shift to machine learning and deep learning demonstrated that AI thrives when systems are designed to learn and adapt. This period also set the stage for the generative AI revolution that defines the current era.

AI in the 2020s: The Generative AI Era

The 2020s mark a transformative period in the history of Artificial Intelligence, defined by the rise of generative AI models and their integration into everyday life. These advancements have not only revolutionized industries but also brought AI into the hands of millions of users, reshaping how we interact with technology.

Generative AI: The Game Changer

Generative AI models, such as OpenAI’s GPT series, represent a significant leap in AI capabilities. These models can generate human-like text, images, and even code, demonstrating creativity and utility in unprecedented ways.

- What is Generative AI?

Generative AI refers to systems trained to create new content based on patterns learned from vast datasets. Unlike earlier AI, which primarily analyzed and classified data, generative models produce outputs like stories, artwork, and detailed responses to user queries.

Key Milestones in Generative AI

- 2020: GPT-3

OpenAI released GPT-3, a 175-billion-parameter language model capable of generating text with remarkable fluency and coherence. It became a foundation for AI-powered tools in content creation, customer support, and education.

- 2022: ChatGPT

The public launch of ChatGPT introduced conversational AI to millions. Built on a model from the GPT-3.5 series, ChatGPT provided accessible, interactive, and practical AI capabilities to businesses and individuals alike.

- 2023: ChatGPT Plus

OpenAI introduced a subscription plan offering faster response times and priority access during peak usage, reflecting the growing demand for conversational AI.

- 2024: Enhanced Features

Advancements in multi-turn conversations and memory capabilities allowed AI systems to maintain context over longer interactions, making them more effective in tasks like virtual tutoring, personal assistance, and creative collaboration.

Integration into Everyday Life

Generative AI is now embedded in a wide range of tools and platforms:

- Business: AI-powered writing assistants, automated code generators, and customer service bots are streamlining operations.

- Education: AI helps students with personalized learning experiences and educators with lesson planning and assessment.

- Art and Creativity: Tools like DALL-E enable users to create custom images, while ChatGPT assists in brainstorming and content drafting.

The Importance of Ethical and Responsible AI Development

As generative AI becomes more widespread, concerns about its ethical implications have also grown. Issues such as misinformation, bias, and data privacy are at the forefront of discussions about responsible AI use.

- OpenAI and other organizations are working with policymakers and industry leaders to address these challenges, focusing on transparency and fairness in AI systems.

- Developers are also enhancing AI safety measures, such as content filters and user moderation tools, to minimize misuse.

The Future of Generative AI

Generative AI continues to evolve, with future developments likely to include:

- Smarter Systems: AI models that can handle even more complex queries and tasks.

- Better Personalization: Systems tailored to individual users, adapting to their preferences and needs over time.

- Wider Integration: AI becoming a seamless part of industries like healthcare, logistics, and entertainment.

Reflection: Generative AI and You

Think about how generative AI tools have already impacted your life:

- Have you used AI to assist with tasks like writing, designing, or problem-solving?

- What possibilities do you see for generative AI in your field or daily routine?

The 2020s represent an inflection point for AI, where its potential is being realized across multiple domains. By understanding this era, we can better appreciate the advancements shaping our present and future.

Copyright 2024 Mascatello Arts, LLC All Rights Reserved